
docs/pulse/read-pulse.md

+18-29


@@ -10,41 +10,36 @@ last_update:

10

10

11

11

## Read Experiment Results

12

12

13

-

To read the results of your experiment, go to the **Results** tab, where you will see your experiment **exposures**, and **Scorecard**. **Scorecard** shows the metric lifts for all Primary and Secondary metrics you set up at experiment creation.

13

+

To read the results of your experiment, go to the **Results** tab, where you will see your experiment hypothesis, **exposures**, and **Scorecard**.

At the top of Pulse is the Exposures Chart. Exposures are the unique experimental units enrolled in the experiment. This is typically the number of unique users, and for device-level experimentation, this is the number of devices. The timeline shows you when the experiment was started, and how many exposures were enrolled on any given day. You can see the rate at which users were added into each group of the experiment, how many total users were exposed, and confirm the target ratio matches what you configured in experiment setup.

19

+

At the top of the Results page is the Exposures Chart. Exposures are the unique experimental units enrolled in the experiment. This is typically the number of unique users, and for device-level experimentation, this is the number of devices. The timeline shows you when the experiment was started, and how many exposures were enrolled on any given day. You can see the rate at which users were added into each group of the experiment, how many total users were exposed, and confirm the target ratio matches what you configured in experiment setup.
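One way to confirm that the observed split matches the target ratio is a sample ratio mismatch (SRM) check. The sketch below is an illustration only, not Statsig's implementation: the function name `srm_p_value` and the exposure counts are hypothetical, and it handles only a two-group experiment using a chi-squared goodness-of-fit test with one degree of freedom.

```python
import math


def srm_p_value(control: int, test: int, expected_test_share: float = 0.5) -> float:
    """Return the chi-squared goodness-of-fit p-value for a two-group split.

    A small p-value suggests the observed exposure ratio differs from the
    configured target ratio by more than chance alone would explain.
    """
    total = control + test
    expected_test = total * expected_test_share
    expected_control = total - expected_test
    chi2 = (
        (test - expected_test) ** 2 / expected_test
        + (control - expected_control) ** 2 / expected_control
    )
    # With 1 degree of freedom, the chi-squared survival function
    # reduces to erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical exposure counts for a 50/50 experiment.
balanced = srm_p_value(control=5000, test=5050)
skewed = srm_p_value(control=5000, test=5300)
print(f"balanced split p-value: {balanced:.3f}")
print(f"skewed split p-value:   {skewed:.4f}")
```

A very small p-value on the skewed split would be a signal to investigate assignment or logging before trusting any metric lifts.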

20

20

21

-

### "Scorecard"

21

+

### Scorecard

22

+

23

+

The experiment **Scorecard** shows the metric lifts for all Primary and Secondary metrics you set up at experiment creation.

22

24

23

25

#### Immediately Post-experiment Start

24

26

25

-

For up to the first 24 hours after starting your experiment (before our daily Pulse run), the **Scorecard** section, a.k.a. Pulse, is calculated in near real-time (this only applies to Statsig Cloud, for WHN projects you will need to reload pulse on demand or set up a daily schedule). This more real-time Pulse is designed to enable you to confirm that exposures and metrics are being calculated as expected and debug your experiment or gate setup if needed.

27

+

For up to the first 24 hours after starting your experiment (before our daily metric results run), the **Scorecard** section, a.k.a. Pulse, is calculated hourly. This applies only to Statsig Cloud; for WHN projects, you will need to reload Pulse on demand or set up a daily schedule. This more real-time Pulse is designed to let you confirm that exposures and metrics are being calculated as expected, and to debug your experiment or gate setup if needed.

26

28

27

29

Please note that you should **not** make any experiment decisions based on the more real-time Pulse data in this first 24-hour window after experiment start. Experiments should only be called once the experiment has hit its target duration, as set by your primary metric(s) hitting experimental power. Read more about target duration [here](/experiments-plus/create-new#target-duration).

28

30

29

-

<img width="1205" alt="Screen Shot 2023-06-19 at 3 29 13 PM" src="https://github.com/statsig-io/docs/assets/101903926/c148deb6-b20d-4f36-af1a-83836d23371b"/>

30

-

31

-

32

31

Because data in this early post-experiment start window is designed for diagnostic rather than decision-making purposes, you will notice a few key differences between this Pulse view and the Pulse results that start showing once daily runs have begun:

33

32

34

33

- Metric lifts do not have confidence intervals

35

34

- No time-series view of metric trends

36

35

- No projected topline impact analysis

37

36

- No option to apply more advanced statistical tactics, such as CUPED or Sequential Testing

38

37

39

-

<img width="992" alt="Screen Shot 2023-06-19 at 3 32 13 PM" src="https://github.com/statsig-io/docs/assets/101903926/c34d4358-c92d-488f-ae49-d073904065d0"/>

40

-

41

-

42

-

43

38

All of these are available in daily Pulse results, which will start showing after the next daily Pulse run.

44

39

45

40

#### Post-first Day Scorecard

46

41

47

-

<img width="1194" alt="Screen Shot 2023-09-20 at 11 35 05 AM" src="https://github.com/statsig-io/docs/assets/101903926/85fdc847-8d70-4215-a6ad-5a1f2d49c5e7"/>

The Pulse daily run calculates the difference between the comparable randomization groups (e.g., test and control) across your company's suite of metrics, and applies a statistical test to the results. You can read more about Statsig's stats engine [here](/stats-engine).
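To make the idea of "difference plus a statistical test" concrete, here is a minimal sketch of comparing two groups on one metric. It is an assumption-laden illustration, not Statsig's stats engine: it uses a plain normal approximation on the difference in means, and the function name `compare_groups` and the sample data are hypothetical.

```python
from statistics import NormalDist, mean, variance


def compare_groups(control, test, confidence=0.95):
    """Compare per-unit metric values between two groups.

    Returns the relative lift (percent), a two-sided p-value, and a
    confidence interval on the absolute difference in means.
    """
    diff = mean(test) - mean(control)
    # Standard error of the difference, using unpooled sample variances.
    se = (variance(test) / len(test) + variance(control) / len(control)) ** 0.5
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "lift_pct": 100 * diff / mean(control),
        "p_value": p_value,
        "ci": (diff - z_crit * se, diff + z_crit * se),
    }


# Hypothetical per-user metric values for each group.
control_values = [1, 2, 3, 4, 5] * 20
test_values = [2, 3, 4, 5, 6] * 20

result = compare_groups(control_values, test_values)
print(result)
```

Lowering `confidence` narrows the interval, which is the same trade-off described for the confidence interval setting in Pulse.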

50

45

@@ -75,12 +70,7 @@ There are a few different views to see your Pulse metric lifts, namely:

75

70

- Days since exposure

76

71

77

72

The Cumulative results view includes a detailed view on hover, where you can additionally view the raw statistics used in the Pulse metric lift calculations, as well as topline impact.

78

-

79

-

<img width="1282" alt="Screen Shot 2023-09-20 at 11 35 16 AM" src="https://github.com/statsig-io/docs/assets/101903926/1509479e-b5c6-416f-8d2a-ac0672165dd1"/>

80

-

81

-

82

-

<img width="1194" alt="Screen Shot 2023-09-20 at 11 35 35 AM" src="https://github.com/statsig-io/docs/assets/101903926/9cdb7294-c979-4e0d-b26c-e20c86794d2b"/>

83

-

73

+

84

74

85

75

### Dimensions

86

76

@@ -90,28 +80,27 @@ There are two ways in which we can breakdown a given Pulse metric - one is by a

90

80

91

81

User Dimensions refer to user-level attributes that are either part of the user object you log, or additional metadata that Statsig extracts. Examples of these user attributes could be operating system, country, and region.

92

82

93

-

You can create [custom queries](/pulse/custom-queries) to create queries that _filter on_ or _group by_ available user dimensions. For example, you could "See results for users in the US", or "See results for users using iOS, grouped by their country".

83

+

You can create [custom "explore" queries](/pulse/custom-queries) to _filter on_ or _group by_ available user dimensions. For example, you could "See results for users in the US", or "See results for users using iOS, grouped by their country". Go to the "explore" tab to draft a custom query.

Events Dimensions refer to the value or metadata logged as part of a custom event that is used to define the metric. If you want to see Pulse results for a metric broken down by categories that are specific to that metric, [specify the dimension](/metrics/metric-dimensions) you want to break down by in the **value** or **metadata** attributes when you log the source event. For example, when you log a "click" event on your web or mobile application, you may also log the target category using the **value** attribute as shown below. Pulse will automatically generate results for each category in addition to the top level metric.
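As a rough illustration of how a logged **value** turns into per-category results, the sketch below models events with a hypothetical `LoggedEvent` dataclass and counts them by value. This is not the Statsig SDK: the class, the helper `breakdown_by_value`, and the category names are assumptions made for the example, and the real logging call depends on the SDK you use.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LoggedEvent:
    """Hypothetical stand-in for an event logged through an SDK."""

    event_name: str
    value: str  # the category used as the event dimension
    metadata: dict = field(default_factory=dict)


# Hypothetical "click" events, each tagged with a target category.
events = [
    LoggedEvent("click", "navigation"),
    LoggedEvent("click", "checkout", {"page": "cart"}),
    LoggedEvent("click", "navigation"),
    LoggedEvent("page_view", "home"),
]


def breakdown_by_value(logged, event_name):
    """Count events of one name, grouped by their value attribute."""
    return Counter(e.value for e in logged if e.event_name == event_name)


totals = breakdown_by_value(events, "click")
print("top-level clicks:", sum(totals.values()))
for category, count in sorted(totals.items()):
    print(f"  {category}: {count}")
```

The top-level metric is the total across all categories, and each distinct value becomes one row in the breakdown.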

98

91

99

92

To see the Pulse result breakdowns for all categories within a metric, click on the (+) sign next to the metric.

These settings can be adjusted at any time to view Pulse results with different significance levels.

109

100

110

-

* **Apply Bonferroni Correction**: Select this option to apply the correction in experiments with more than one test group. This reduces the probability of Type I errors (false positives) by adjusting the significance level alpha, which will be divided by the number of test variants in the experiment.

101

+

* **Apply Benjamini-Hochberg Procedure per Variant**: Select this option to apply the procedure to reduce the probability of false positives by adjusting the significance level for multiple comparisons - [read more here](/stats-engine/methodologies/benjamini–hochberg-procedure).

111

102

* **Confidence Interval**: Changes the confidence interval displayed with the metric deltas. Choose a lower confidence level (e.g., 80%) when there's higher tolerance for false positives and fast iteration with directional results is preferred over longer/larger experiments with increased certainty.

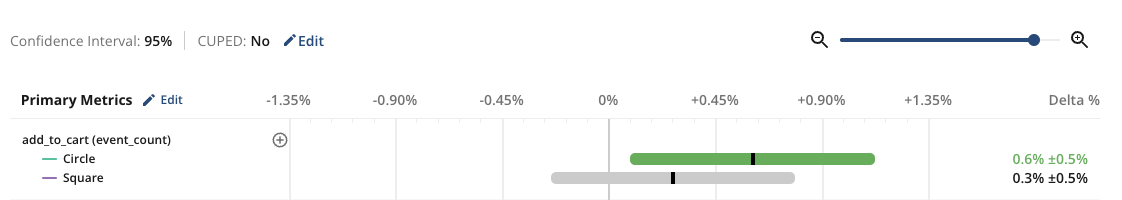

112

103

* **CUPED**: Toggle CUPED on/off via the inline settings above the metric lifts. NOTE: this setting can only be toggled for **Scorecard** metrics, as CUPED is not applied to non-Scorecard metrics.

113

104

* **Sequential Testing**: Applies a correction to the calculated p-values and confidence intervals to reduce false positive rates when evaluating results before the target completion date of the experiment. This helps mitigate the increased false positive rate associated with the "peeking problem". Toggle Sequential Testing on/off via the inline settings above the metric lifts. NOTE: this setting is available only for experiments with a set target duration.
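For readers unfamiliar with the Benjamini-Hochberg procedure mentioned in the settings above, the following is a minimal sketch of the textbook step-up version. How Statsig applies it per variant may differ, so treat the function name `benjamini_hochberg` and the example p-values as hypothetical.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans marking which p-values are significant.

    Textbook step-up procedure: sort the p-values, find the largest rank k
    with p_(k) <= (k / m) * alpha, and reject every hypothesis ranked at or
    below k.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])

    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff = rank  # keep the largest rank that passes

    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff:
            significant[idx] = True
    return significant


# Hypothetical p-values for four metric comparisons.
decisions = benjamini_hochberg([0.01, 0.03, 0.035, 0.5], alpha=0.05)
print(decisions)
```

Note that the second p-value (0.03) misses its own threshold of 0.025 but is still marked significant, because a larger-ranked p-value (0.035) passes its threshold of 0.0375. That step-up behavior is what distinguishes this procedure from simply dividing alpha by the number of comparisons.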

114

-

115

-
